In the fast-paced world of artificial intelligence, the emergence of 1-bit large language models (LLMs) heralds a transformative era. These groundbreaking models are redefining efficiency, speed, and sustainability in AI, promising a future where digital interactions are more intuitive and technology is more accessible. Here, we dive deep into the world of 1-bit LLMs, exploring seven key insights that highlight their potential to reshape our digital landscape. This exploration not only delves into the technical marvels of 1-bit LLMs but also aims to bring a touch of humor to the complex world of AI.

Introduction to 1-Bit LLMs: The Pinnacle of AI Efficiency

1-bit LLMs represent a significant stride toward optimizing AI’s efficiency and sustainability. By compressing model weights to very low-bit values (binary, or ternary in the case of BitNet b1.58), these models keep computational resource use to a minimum, paving the way for a more accessible and environmentally friendly approach to AI.

Explanation:

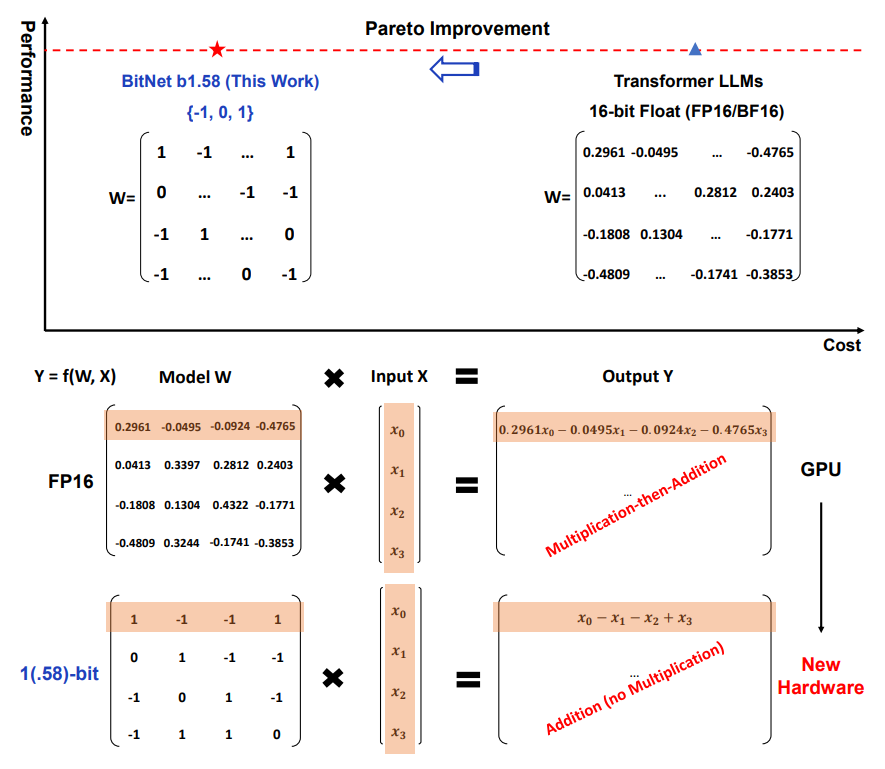

On the left side, BitNet b1.58 is depicted with a weight matrix whose entries take one of three possible values (-1, 0, 1). This is a ternary representation, which works out to about 1.58 bits per weight (log2(3) ≈ 1.58), as opposed to the standard 16-bit floating-point representation on the right. The ternary representation allows for simplified calculations, which could potentially run on new hardware optimized for these types of operations. This is shown at the bottom left, where matrix multiplication reduces to simple addition and subtraction, without the need for multiplication operations. This suggests that the model can perform calculations using simpler and potentially more energy-efficient hardware.

In contrast, the traditional Transformer LLMs on the right side use a 16-bit floating-point representation for weights in the model, as shown in the matrix W. This representation requires more complex calculations, typically performed on GPUs, as illustrated by the “Multiplication-then-Addition” process.

The center of the image demonstrates the functional process of both models, where the model W (weights) is multiplied by the input X to produce the output Y. For the FP16 model, the output is a result of a multiplication of the input values with their corresponding weights followed by addition. For the BitNet b1.58, the output is obtained by simple addition and subtraction, as indicated by the equation x0 - x1 - x2 + x3.
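The add-and-subtract idea described above can be sketched in a few lines of Python. This is only an illustration, not how BitNet b1.58 is actually implemented: the function name `ternary_matvec` and the sample values are made up for this example, and real systems would use packed weights and optimized kernels.

```python
def ternary_matvec(weights, x):
    """Multiply a ternary weight matrix by a vector using only + and -.

    `weights` is a list of rows whose entries are -1, 0, or 1.
    (Illustrative helper; the name and interface are assumptions.)
    """
    outputs = []
    for row in weights:
        if len(row) != len(x):
            raise ValueError("each weight row must match the input length")
        total = 0.0
        for w, xi in zip(row, x):
            if w == 1:
                total += xi      # weight +1: add the input
            elif w == -1:
                total -= xi      # weight -1: subtract the input
            elif w != 0:
                raise ValueError("weights must be -1, 0, or 1")
            # weight 0: the input is skipped entirely
        outputs.append(total)
    return outputs


# The row [1, -1, -1, 1] reproduces the figure's x0 - x1 - x2 + x3.
W = [[1, -1, -1, 1],
     [0, 1, -1, 0]]
x = [0.5, 2.0, -1.0, 3.0]
y = ternary_matvec(W, x)
```

Note that no multiplication appears anywhere in the inner loop, which is the property the figure highlights.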

The top part of the image shows a performance vs. cost graph with a dashed red line indicating the current performance standard. The blue arrow labeled “Pareto Improvement” points from the Transformer LLMs toward the new BitNet b1.58 model, suggesting that the new model achieves comparable or better performance at a lower cost.

1. The Essence of 1-Bit LLMs: A Deep Dive

1-bit large language models (LLMs) stand at the cutting edge of artificial intelligence, embodying a radical shift towards minimalist, yet profoundly impactful computational strategies. At their core, these models leverage a principle that might seem simplistic at first glance but is revolutionary in its application: storing the model's high-precision weights in a binary (or near-binary) format. This section aims to peel back the layers of this approach, showing how it can enhance processing speed, slash energy consumption, and democratize AI technology.
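To make the idea concrete, here is a minimal Python sketch of how full-precision weights might be mapped to the three values (-1, 0, 1) used by BitNet b1.58. It assumes an absmean-style scheme (scale by the mean absolute weight, round, then clip), which this post does not spell out; the function name `absmean_ternarize` and the sample weights are purely illustrative.

```python
def absmean_ternarize(weights, eps=1e-8):
    """Map a matrix of floats to entries in {-1, 0, 1}.

    Assumed scheme (not described in this post): divide each weight by
    the mean absolute weight, round to the nearest integer, then clip
    the result to the range [-1, 1].
    """
    flat = [w for row in weights for w in row]
    if not flat:
        raise ValueError("weights must not be empty")
    gamma = sum(abs(w) for w in flat) / len(flat)  # mean absolute value
    scale = gamma + eps                            # eps avoids dividing by zero
    ternary = [
        [max(-1, min(1, round(w / scale))) for w in row]
        for row in weights
    ]
    return ternary, gamma


# Hypothetical full-precision weights, chosen only for illustration.
full_precision = [[0.9, -0.05, -1.2],
                  [0.3, 0.0, -0.6]]
ternary, gamma = absmean_ternarize(full_precision)
```

Small weights collapse to 0 and larger ones snap to -1 or 1, so each entry carries far less information than a 16-bit float. That loss is the source of the accuracy questions raised later in this post.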

2. Speed and Efficiency Redefined

The crux of the 1-bit approach lies in its efficiency. By reducing weights to a low-bit form, the amount of computation needed for each operation plummets. This efficiency can translate into dramatically faster processing, enabling 1-bit LLMs to perform tasks, from language translation to content generation, at a pace that outstrips traditional models. Moreover, this speed need not come at the expense of accuracy: careful training and quantization techniques aim to keep these models performing at a high level despite the reduced precision, although trade-offs remain (see section 6).

3. Economic Viability

The economic implications of 1-bit LLMs extend far beyond mere cost savings. By drastically reducing the computational resources required for training and running AI models, 1-bit LLMs democratize the field of artificial intelligence, making cutting-edge technology accessible to a wider array of innovators and creators. This democratization is not just about enabling smaller entities to participate in AI development; it’s about leveling the playing field, where the quality of ideas, rather than the depth of pockets, dictates the pace of innovation.

4. A Step Towards Sustainable AI

The journey towards sustainable AI is not just a technological challenge; it’s an environmental imperative. As the digital age advances, the environmental footprint of AI technologies has become a pressing concern. Enter 1-bit large language models (LLMs), a beacon of hope in the quest for green AI. These models stand out not merely for their computational efficiency but for their potential to significantly reduce the energy consumption associated with AI operations, marking a pivotal shift towards eco-friendly technology solutions.

5. Enhancing Global Accessibility

The advent of 1-bit LLMs marks a significant milestone in making AI technologies more accessible worldwide, especially in technologically underserved regions. This breakthrough addresses a critical challenge in the global digital landscape: the digital divide. By enabling advanced AI tools to run on less powerful hardware, 1-bit LLMs ensure that the benefits of AI are not confined to high-income countries or individuals but are spread across the globe, reaching communities that previously faced barriers to access.
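A rough back-of-the-envelope calculation shows why lower-precision weights matter for modest hardware. The sketch below compares weight storage at 16 bits per weight with an idealized log2(3) ≈ 1.58 bits per ternary weight. The 7-billion-parameter count is a hypothetical example, and real deployments add overhead (activations, weight packing, scaling factors) that this estimate ignores.

```python
import math


def weight_memory_gib(num_params, bits_per_weight):
    """Idealized storage for model weights, in GiB (2**30 bytes)."""
    return num_params * bits_per_weight / 8 / 2**30


num_params = 7_000_000_000  # hypothetical model size, for illustration only

fp16_gib = weight_memory_gib(num_params, 16)
ternary_gib = weight_memory_gib(num_params, math.log2(3))
ratio = fp16_gib / ternary_gib  # equals 16 / log2(3), roughly a 10x reduction

print(f"FP16 weights:    {fp16_gib:.2f} GiB")
print(f"Ternary weights: {ternary_gib:.2f} GiB")
print(f"Reduction:       {ratio:.1f}x")
```

Under these assumptions, weights that would need a high-end GPU at 16 bits could, in principle, fit in the memory of a far more ordinary device.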

6. Challenges Ahead: Accuracy and Effectiveness

The journey toward refining 1-bit large language models (LLMs) is paved with significant challenges, especially when it comes to balancing the efficiency gains with the need for high accuracy and effectiveness. The core of these challenges lies in the intrinsic trade-offs introduced by the compression techniques central to 1-bit models. Reducing weights to a binary or ternary format inevitably raises concerns about the model’s ability to capture and interpret the nuances of language, a critical factor for tasks requiring high levels of precision, such as natural language understanding, sentiment analysis, and machine translation.

7. The Future Landscape of AI

The development of 1-bit LLMs signifies a promising direction for AI, with potential applications ranging from enhanced natural language processing to more efficient automated services. As the technology evolves, it is set to usher in a new era of innovation and accessibility in AI.

Conclusion

The advent of 1-bit LLMs marks a pivotal moment in the quest for more efficient, accessible, and sustainable AI technologies. While challenges remain, the potential benefits of these models make them a cornerstone for the next generation of AI development. As we continue to explore the possibilities, the blend of technical innovation and a commitment to positive impact promises a future where AI is not just more powerful but also more equitable and environmentally conscious.

For more information, visit: https://analyticsindiamag.com/microsoft-introduces-1-bit-llm/

For more amazing blog posts, visit: https://trulyai.in
